Overview

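Reincubate's Camo SDK lets you send live audiovisual data from your app to Camo Studio on macOS and Windows. It works over USB or wirelessly. USB is optimized for low latency and performance, and uses as few resources in your app as possible. Wireless frees you from cables.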

The Camo SDK is available to commercial partners and collaborators. For more information, please contact enterprise@reincubate.com.

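Currently, the SDK only supports iOS apps running on physical iOS or iPadOS devices with iOS 12 or later (simulators and Mac Catalyst are not supported). The Camo SDK is not Objective-C compatible out of the box, so if your app is written mainly in Objective-C, you may need to create a wrapper.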

API reference

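If you'd like to jump straight in, you can browse the full API reference, which documents every symbol the Camo SDK provides. Otherwise, this document walks you through an example integration with the SDK.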

Installing the Camo SDK

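The Camo SDK is delivered as a single file named CamoProducerKit.xcframework. This self-contained framework includes everything you need and has no external dependencies, so installation is easy.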

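- Select your app's project in the Project Navigator.

- Select your app's target from the project's targets sidebar. If you don't see the sidebar, it may be in a drop-down menu at the top of the page.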

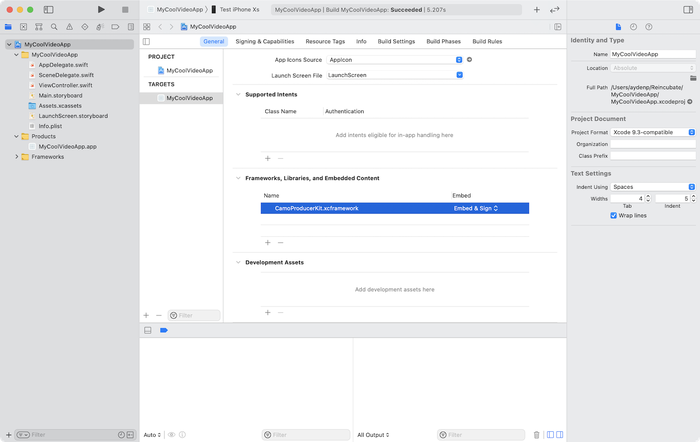

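- Make sure you're on the "General" tab, then scroll down to the "Frameworks, Libraries, and Embedded Content" heading.

- Drag and drop the CamoProducerKit.xcframework file into the file list below that heading.

- Make sure the SDK is set to "Embed & Sign".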

Once you're done, you should see the framework as shown:

Integrating with your app

Controlling the Camo service

At the heart of CamoProducerKit is CamoController, which gives your app a centralized interface for controlling the Camo SDK.

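In your app, you should initialize an instance of CamoController. You can do this at app launch or defer it until later. Until you explicitly start it with start(), the Camo controller uses very few resources. However, it does prepare audio and video encoding when it is initialized.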

```swift
import CamoProducerKit

class MyVideoManager {
    let controller = CamoController()
    // ...
}
```

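When you want to activate the Camo integration in your app and start the Camo service so that Camo Studio can connect, call start(). When you're finished, stop it by calling stop().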

```swift
controller.start()
// ...
controller.stop()
```

This code is a start, but your app still can't accept new connections from Camo Studio.

Responding to new connections

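Before your app can accept new connections from Camo Studio, you need to implement CamoControllerDelegate. By implementing its two delegate methods, you are notified when the connection state changes and can decide whether to accept or reject each connection.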

```swift
extension MyVideoManager: CamoControllerDelegate {
    // Upon receiving a connection request, you can decide whether or not to accept it.
    func camoControllerShouldAcceptIncomingConnection(_ controller: CamoController) -> Bool {
        // You could return false if you aren't ready to accept connections, such as during onboarding.
        return true
    }

    // Called whenever the connection state changes, such as if the Camo service starts or stops,
    // or if a new connection is made.
    func camoController(_ controller: CamoController, stateDidChangeTo state: CamoControllerState?) {
        // From here, you can update your UI and other state
        print("New state:", state)
    }
}
```

With that in place, you should be able to open your app, connect your device over USB, and see it in Camo Studio.

Wireless connection

To use a wireless connection, the user must grant your app local network access. The following Bonjour services must be added to Info.plist under NSBonjourServices:

- _camo._tcp

- _camopairing._tcp

Once you call camoController.start(), the SDK is ready to pair with Camo Studio and establish a network connection.
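A missing NSBonjourServices entry is easy to overlook, so it can help to check the Info.plist in debug builds before anyone tries to pair. The sketch below is a hypothetical helper, not part of CamoProducerKit: requiredCamoBonjourServices and missingCamoBonjourServices(in:) are illustrative names, and the only Camo-specific facts it relies on are the two service types listed above. It takes a plain dictionary so it can be fed the app's Info.plist at runtime or a literal in tests.

```swift
// Hypothetical helper, not part of CamoProducerKit.
// The two service types are the ones the Camo SDK documentation asks for.
let requiredCamoBonjourServices = ["_camo._tcp", "_camopairing._tcp"]

/// Returns the required Bonjour service types missing from an Info.plist dictionary.
/// In the app (with Foundation imported), a debug-time check could look like:
///   assert(missingCamoBonjourServices(in: Bundle.main.infoDictionary ?? [:]).isEmpty)
func missingCamoBonjourServices(in info: [String: Any]) -> [String] {
    // Treat a missing or wrongly typed NSBonjourServices entry as an empty list.
    let declared = Set(info["NSBonjourServices"] as? [String] ?? [])
    return requiredCamoBonjourServices.filter { !declared.contains($0) }
}
```

Returning the missing names, rather than a Bool, makes the failure message point straight at the entry that needs adding.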

Pairing

The pairing process starts when the user clicks the "+" button in Camo Studio. Camo Studio then displays a QR code, which your app needs to scan.

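Pairing steps:

- Click "+" in Camo Studio to display the QR code.

- Scan the QR code and read its string data.

- Pass that data to the SDK by calling camoController.pairStudioWithQRCodeData(codeData) { success in }.

- The pairStudioWithQRCodeData method has a callback that tells you whether pairing succeeded.

- The SDK pairs with Camo Studio and establishes the connection automatically.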

If you need to cancel pairing (for example, if the user closes the QR scanner or the app), call camoController.cancelPairing().

Pairing has a 30-second timeout. If pairing doesn't complete within 30 seconds of calling pairStudioWithQRCodeData, the method's callback is called with success set to false.
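The pairing rules above (one pairStudioWithQRCodeData call per scan, cancelPairing() when the scanner is dismissed, and a callback that reports success or failure, including on timeout) can be wrapped in a small coordinator so the UI handles each outcome exactly once. This is a sketch under stated assumptions, not SDK code: PairingSession, Outcome, begin(with:completion:) and abort() are hypothetical names. The SDK calls are injected as closures instead of being called directly, because the exact parameter types of pairStudioWithQRCodeData are not spelled out here; the sketch assumes a String QR payload and a Bool success flag, as in the steps above. It also assumes every call happens on one queue, such as the main queue.

```swift
// Hypothetical wiring in the app (assumed SDK signatures, see lead-in):
// let session = PairingSession(
//     pair: { code, done in
//         camoController.pairStudioWithQRCodeData(code) { success in
//             DispatchQueue.main.async { done(success) }   // hop back to the main queue
//         }
//     },
//     cancel: { camoController.cancelPairing() })

/// Hypothetical coordinator (not part of CamoProducerKit) for one QR-code pairing attempt at a time.
/// Not thread-safe: call every method, and deliver the pairing callback, on the same queue.
final class PairingSession {
    enum Outcome: Equatable { case paired, failed, cancelled }

    private let pair: (String, @escaping (Bool) -> Void) -> Void
    private let cancel: () -> Void
    private var attempt = 0                     // identifies the attempt currently in flight
    private var pending: ((Outcome) -> Void)?   // completion for that attempt, if any

    init(pair: @escaping (String, @escaping (Bool) -> Void) -> Void,
         cancel: @escaping () -> Void) {
        self.pair = pair
        self.cancel = cancel
    }

    var isPairing: Bool { pending != nil }

    /// Starts pairing with the scanned QR string. Returns false if an attempt is already running.
    @discardableResult
    func begin(with codeData: String, completion: @escaping (Outcome) -> Void) -> Bool {
        guard pending == nil else { return false }
        attempt += 1
        let current = attempt
        pending = completion
        pair(codeData) { [weak self] success in
            // Ignore callbacks from attempts that were cancelled in the meantime.
            guard let self = self, self.attempt == current, let done = self.pending else { return }
            self.pending = nil
            done(success ? .paired : .failed)   // false also covers the 30-second timeout
        }
        return true
    }

    /// Call when the user dismisses the scanner or leaves the app mid-pairing.
    func abort() {
        guard let done = pending else { return }
        pending = nil
        attempt += 1   // invalidate the in-flight callback
        cancel()
        done(.cancelled)
    }
}
```

Tagging each attempt with a counter means a late callback from a cancelled attempt cannot overwrite the result of a newer one.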

Paired devices

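Once pairing is complete, the SDK saves the pairing data to a list of paired devices. CamoController provides two APIs for working with that list:

- pairedDevices, to get the list (for example, to show it in your UI)

- removePairedDevice, to forget a device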

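The next time you want to connect wirelessly to Camo Studio, just click the "+" button in Camo Studio and wait for the connection to be established. There's no need to scan the QR code again.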

Determining connection state

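CamoController provides a CamoControllerState property, which is also passed to the state-change delegate method shown above. This enum carries information that is useful to your app, such as the name of the connected computer to display in your UI.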

Here's an example of how to update your UI to reflect the connection state:

```swift
func camoController(_ controller: CamoController, stateDidChangeTo state: CamoControllerState?) {
    let statusText = { () -> String in
        guard case let .running(serviceState) = state else {
            return "Camo service not running"
        }
        switch serviceState {
        case .active(let connection):
            return "Connected to \(connection.name)"
        case .paused(let connection):
            return "Paused, but connected to \(connection.name)"
        case .notConnected:
            return "Camo service running, but no connection"
        }
    }()

    DispatchQueue.main.async {
        self.statusTextLabel.text = statusText
    }
}
```

Sending audiovisual data

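To give clients as much flexibility as possible, the Camo SDK doesn't provide or control any capture. Instead, you're responsible for supplying CamoController with audio and video data. This takes two simple API calls: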

```swift
// upon receiving video from the camera or elsewhere
camoController.enqueueVideo(sampleBuffer: sampleBuffer)

// upon receiving audio from the microphone or elsewhere
camoController.enqueuePCMAudio(data: chunk)
```

Sending video

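If you have access to the CMSampleBuffers in your video pipeline, passing that data to the Camo SDK is simple.

That makes setup with a basic video AVCaptureSession very straightforward. Here's a practical example: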

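```swift
import AVFoundation
import CamoProducerKit

// AVCaptureVideoDataOutputSampleBufferDelegate is an Objective-C protocol,
// so the conforming class must inherit from NSObject.
class CaptureController: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
    let captureSession = AVCaptureSession()
    let videoDataOutputQueue = DispatchQueue(label: "VideoDataOutputQueue")
    let camoController: CamoController

    init(camoController: CamoController) {
        self.camoController = camoController
        super.init()
    }

    func startSession() throws {
        guard let camera = AVCaptureDevice.default(for: .video) else {
            fatalError("No camera found")
        }
        let input = try AVCaptureDeviceInput(device: camera)
        captureSession.addInput(input)

        let output = AVCaptureVideoDataOutput()
        output.alwaysDiscardsLateVideoFrames = true
        // The Camo SDK expects BGRA frames, so request that pixel format from the output.
        output.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_32BGRA]
        output.setSampleBufferDelegate(self, queue: videoDataOutputQueue)
        captureSession.addOutput(output)

        // startRunning() blocks until the session is running, so avoid calling it on the main thread.
        captureSession.startRunning()
    }

    // Called on videoDataOutputQueue for every captured frame.
    func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
        camoController.enqueueVideo(sampleBuffer: sampleBuffer)
    }
}
```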

When sending video frames, make sure that:

- The frame rate is 30 FPS (a frame duration of 1/30 second).

- The pixel format is kCVPixelFormatType_32BGRA.

You can find more details in the API reference.
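If your video source delivers frames faster than 30 FPS and you can't reconfigure it, one option is to drop frames by presentation timestamp before calling enqueueVideo(sampleBuffer:). The sketch below shows that technique (timestamp-based frame decimation). FrameRateLimiter and shouldForward(frameAt:) are hypothetical names, not SDK API, and the 10% jitter tolerance is an arbitrary choice. With an AVCaptureSession, configuring the camera itself through AVCaptureDevice's activeVideoMinFrameDuration and activeVideoMaxFrameDuration is usually the cleaner fix. Note that decimation can only lower a frame rate, never raise it.

```swift
/// Hypothetical helper (not part of CamoProducerKit): drops frames so that at most
/// `targetFPS` frames per second are forwarded, based on presentation timestamps in seconds.
/// With AVFoundation, a frame's timestamp is CMSampleBufferGetPresentationTimeStamp(sampleBuffer).seconds.
struct FrameRateLimiter {
    let interval: Double               // target time between forwarded frames, in seconds
    private var nextDeadline: Double?  // earliest timestamp at which the next frame is forwarded

    init(targetFPS: Double = 30) {
        precondition(targetFPS > 0, "targetFPS must be positive")
        interval = 1.0 / targetFPS
    }

    /// Returns true if the frame at `timestamp` should be passed on to enqueueVideo(sampleBuffer:).
    mutating func shouldForward(frameAt timestamp: Double) -> Bool {
        guard let deadline = nextDeadline else {
            nextDeadline = timestamp + interval   // the first frame always goes through
            return true
        }
        // Allow 10% of an interval of jitter so frames that are almost on time are kept.
        guard timestamp >= deadline - interval * 0.1 else { return false }
        // Step the deadline from the previous one to avoid drift, but resynchronize
        // if the stream fell behind by more than one interval (for example, after a stall).
        nextDeadline = timestamp - deadline > interval ? timestamp + interval : deadline + interval
        return true
    }
}
```

Inside captureOutput(_:didOutput:from:), you would enqueue a frame only when shouldForward(frameAt:) returns true; a 60 FPS stream then comes out as every other frame.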

Sending audio

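Sending audio is similar to sending video, but may take more steps depending on how your app receives it. For an example implementation, see the demo app that ships with the Camo SDK.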

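When sending audio data, make sure that:

- The sample rate is 48 kHz.

- The audio codec is LPCM.

- There are 2 channels.

- The bit depth is 32.

- Each audio packet contains the number of samples that Camo Studio requires. This differs between macOS and Windows, and you can get it from CamoController.audioSamplesRequired.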

You can find more details in the API reference.
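Because each audio packet must contain exactly the number of samples reported by CamoController.audioSamplesRequired, audio from a capture callback usually has to be re-chunked before it is handed to enqueuePCMAudio(data:). The sketch below is a hypothetical PCMChunker, not part of the SDK, and it rests on assumptions worth checking against the API reference: that "32-bit" means 32-bit float samples in native byte order, that the two channels are interleaved (L, R, L, R, ...), that audioSamplesRequired counts frames (one sample per channel), and that enqueuePCMAudio(data:) takes a Data value. If any of these differ, adjust the element type or the chunk size accordingly.

```swift
import Foundation

/// Hypothetical helper (not part of CamoProducerKit): buffers interleaved 32-bit float PCM
/// and emits fixed-size chunks, keeping any remainder for the next call.
/// Assumed usage: chunker.append(samples).forEach { camoController.enqueuePCMAudio(data: $0) }
struct PCMChunker {
    let framesPerChunk: Int   // assumed to come from CamoController.audioSamplesRequired
    let channelCount: Int     // the SDK expects 2 channels
    private var pending: [Float32] = []

    init(framesPerChunk: Int, channelCount: Int = 2) {
        precondition(framesPerChunk > 0 && channelCount > 0)
        self.framesPerChunk = framesPerChunk
        self.channelCount = channelCount
    }

    /// Appends interleaved samples (L, R, L, R, ...) and returns every complete chunk as raw bytes.
    mutating func append(_ interleaved: [Float32]) -> [Data] {
        pending.append(contentsOf: interleaved)
        let samplesPerChunk = framesPerChunk * channelCount
        var chunks: [Data] = []
        var start = 0
        while pending.count - start >= samplesPerChunk {
            // Copy the samples' in-memory bytes (native byte order) into a Data value.
            chunks.append(pending[start ..< start + samplesPerChunk].withUnsafeBytes { Data($0) })
            start += samplesPerChunk
        }
        pending.removeFirst(start)   // keep only the incomplete tail
        return chunks
    }
}
```

Keeping the leftover samples instead of padding or dropping them avoids audible gaps when capture callbacks don't line up with the packet size Camo Studio asks for.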